COM-B - Feature redesign

Helping users track their behavioural change progress

When

October - November 2021 (4 weeks)

Product

MindGym’s Digital Experience

Team

Content Designer; Solutions Designer (Behavioural Psychologist); Dev. Representative; Illustrator

INTRODUCTION

Upgrading a feature

During my time at MindGym I took on various features of our digital initiative, MindGym’s Digital Experience (DXP), a self-directed learning platform for new managers. One feature that I worked on as the sole designer on a cross-functional agile team was to improve the COM-B experience. COM-B (Susan Michie, 2011) is a behavioural model that looks to measure someone’s capability (C), opportunity (O) and motivation (M), in order to change their behaviour (B). In the app, we ask users a set of questions to find out what is blocking them from successfully performing a skill - such as effective management of a disgruntled team member. We can then use those answers to prescribe information to help them overcome those blockers.

What it looked like

A version of this feature already existed. I set out to improve it based on both feedback from users and our own assessment.

I made a user flow to visualise where COM-B sat in the overall experience. First, after users select a skill, the app gets their baseline COM-B scores. It then takes them through a learning journey before telling them to go out and practise the skill in the real world. Once a user has practised the skill, they come back into the app to reflect on how they did. After a user has performed a skill three times, we ask them the same set of questions again to see how they have progressed.

The COM-B experience itself (highlighted above in pink) started with an introduction, followed by 12 questions to assess a user’s blockers and ended with a feedback page to let users know how they scored. We retained this general structure, but there were many things within each stage that we improved.

Introduction

12 Questions

Feedback

PROBLEMS

Removing the questions wasn’t an option. We needed the questions to track behavioural change, which was key to a) proving the value to clients, b) building motivation in users, and c) tracking the product’s effectiveness internally. So, what issues could we seek to improve?

Unclear Copy

The copy didn’t effectively explain the benefits of completing COM-B to users, including what it meant and why answering the questions would lead to success within the app.

Boring UI

The UI was unengaging and not aligned with our updated design system.

Inefficient UX

Clicking through the 12 questions took time and effort, which made the experience inefficient and boring. As a result, we saw a lot of users refreshing their browsers in order to bypass the questions.

Negativity-Inducing UX

Even after rearranging and randomising the COM-B questions, users answered the questions at the end more negatively (between 40% and 72% more negatively). This implied that users were feeling more negative simply by going through our experience.

Uninspiring Feedback

For those who did answer all the questions, we received feedback that the “feedback” screen was unfulfilling and did not inspire them to answer the questions again — a key aspect of tracking their behaviour change over time.

Unsatisfying UX

For those who completed COM-B multiple times, there was no way for them to track their progress, which they reported was an unsatisfying experience.

UPDATED USER FLOW

Working with the solutions designer on my team, I identified two places to put COM-B in order to test behavioural change with users. Which one a user saw depended on whether they reported back to us that they had performed a skill. If they had, they got one set of questions; if not, they got a different set.

Below is the result of our redesign.

IDEATION

Taking into account the problems users faced and with the new flow in place, I began to explore the UI/UX further.

(UI experimentation on Figma)

Radio Button UX

A problematic aspect of the design was the Likert scale selection, found in each of the 12 questions. The radio button design slowed users down. Depending on screen size, users might not be able to see all of the options. They also had to click on the option and then ‘next’ twelve times, toggling between mouse movement and scrolling. Two effective alternatives were a slider, or arranging the scale horizontally.

(Researching & experimenting with different solutions)

Having played around with a lot of designs, I eventually settled on the buttons over the slider because of possible usability errors with a slider on mobile phones. Because it was a web app, attempting to drag a slider could see users swiping the browser forward or back a page.

On top of that, since we saw so many of our users back out before completing COM-B, and a drop-off in users’ scores towards the end of the questions, we wanted to speed up how quickly they were able to answer questions. One way to do this was to eliminate the Next button. Instead, clicking on an answer would take users straight to the next question. They could still click back if they wanted to change an answer, but eliminating an extra click sped up the time to complete COM-B and thus improved the user’s experience. The horizontal button design was much more conducive to this as well.
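
A minimal sketch of how the auto-advance behaviour described above could work, in TypeScript. The state shape and function names are hypothetical and only illustrate the idea; this is not the production code.

```typescript
// Hypothetical state for the 12-question flow (illustrative names only).
interface QuestionnaireState {
  current: number;            // index of the question on screen
  answers: (number | null)[]; // one Likert value per question, null if unanswered
}

const TOTAL_QUESTIONS = 12;

function createState(total: number = TOTAL_QUESTIONS): QuestionnaireState {
  const answers: (number | null)[] = [];
  for (let i = 0; i < total; i++) {
    answers.push(null);
  }
  return { current: 0, answers };
}

// Selecting an answer records it and moves straight on: no separate Next click.
function selectAnswer(state: QuestionnaireState, value: number): QuestionnaireState {
  const answers = state.answers.slice();
  answers[state.current] = value;
  // Stay on the last question once it has been answered.
  const current = Math.min(state.current + 1, answers.length - 1);
  return { current, answers };
}

// Going back keeps earlier answers so the user can review or change them.
function goBack(state: QuestionnaireState): QuestionnaireState {
  return { current: Math.max(state.current - 1, 0), answers: state.answers };
}

function isComplete(state: QuestionnaireState): boolean {
  return state.answers.every((a) => a !== null);
}
```

Each call to `selectAnswer` replaces the old "select, then Next" pair of clicks, which is where the time saving comes from.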

(Buttons passed the touch accuracy test on mobile)

One consideration with the buttons was how they would fare on mobile. Research has shown that users have the lowest touch accuracy on buttons smaller than 42px. So I mocked up the design on a 320px-wide mobile screen to see whether it was feasible in the worst-case scenario.
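
The same check can be written down as arithmetic. The sketch below is only an illustration: the 42px threshold and 320px width come from the text above, but the number of options, the side padding and the gap between buttons are assumed values, not the real design tokens.

```typescript
// Minimum button size quoted above for reliable touch accuracy.
const MIN_TOUCH_TARGET_PX = 42;

// Width of each button when `options` buttons share a single row.
// `sidePadding` and `gap` are hypothetical layout values.
function buttonWidth(
  viewportWidth: number,
  options: number,
  sidePadding: number,
  gap: number
): number {
  const usable = viewportWidth - 2 * sidePadding - (options - 1) * gap;
  return usable / options;
}

function passesTouchTest(
  viewportWidth: number,
  options: number,
  sidePadding: number,
  gap: number
): boolean {
  return buttonWidth(viewportWidth, options, sidePadding, gap) >= MIN_TOUCH_TARGET_PX;
}

// Assumed example: a five-point scale on a 320px screen with 16px padding and 8px gaps.
const fivePointFits = passesTouchTest(320, 5, 16, 8);
// The same layout with a seven-point scale.
const sevenPointFits = passesTouchTest(320, 7, 16, 8);
```

With these assumed values, five buttons come out at 51.2px each and clear the threshold, while seven buttons would be about 34px each and fail.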

Normal Flow Design or Something Else?

(Example of ‘mission flow’ design with slider)

(Example of ‘mission flow’ design feedback page)

At the time that I was designing the COM-B updates, the rest of the app was having a UI upgrade, including the general ‘mission flow’. I therefore experimented with designing the COM-B flow to look and act the same as the general mission structure. Eventually we decided against this because we wanted COM-B to feel like something separate. We also wanted it to feel impermanent, as if it was sitting on top of the main bulk of the experience, so that users subconsciously knew they had to complete it in order to get back to the main content. Devs had already built COM-B as an overlay, so it made sense to keep it that way, and adding a backdrop to the design could elicit the right response in our users.

(Experimenting with different COM-B designs)

This led to what we called “the beer mat” design: COM-B would look like a set of cards that users flick through. This gave us an opportunity to add fun interactions to help engage users, and flicking through the cards would give them a sense of progress.

To Snooze or not to Snooze

One experience consideration was whether to allow our users to “snooze” COM-B. The prototype we tested (below) got mixed feedback, but enough users suggested they would not do COM-B more than a few times if it wasn’t necessary. In the end, in discussion with the solutions designer, we decided to make it mandatory at the start of a skill, and then again after every third time a user performed the skill.

(Beermat exploration with different imagery and colour)

Experimenting with Imagery

Another way to increase engagement was with an updated use of imagery. We had an illustrator on the team who worked closely with us to create on-brand illustrations. Then it was my job to experiment and work them into the designs.

Deciding on Colour

All of the colours we used came from the brand guidelines in our design system. A big decision we made was to make the intro and feedback screens dark and the question screens light. This was so that they acted like bookends for our users: a visual cue that they had reached a different type of screen. Another benefit was that our illustrations were much more visually appealing on darker backgrounds.

(Experimenting with colour)

Content Considerations

Since users could go through multiple skills at the same time, I knew I needed to design different flows and work closely with content so that users did not read the same alerts twice. Which flow a user saw depended on whether or not they needed to be introduced to the concept of COM-B.

(Tracking what the user knew at each point in the experience)

(How the experience differed when it was not the users’ first time seeing COM-B)

One of the opportunities we saw was to offer our users more information about the COM-B experience so that they would realise the importance of engaging with it. So, I experimented with different designs that offered an optional way for users to “Learn more”, specifically about “Why are we asking these questions?” and “What is COM-B?”.

Showing users their progress on the feedback screen

The first time users answered COM-B, we gave them scores of High, Medium or Low. Every time after that, we gave users a bit more information, since improved scores would encourage them to continue their behavioural change journey. I experimented with designs that showed their changes both as a percentage and in graph form. The end result was to show a percentage change as a first step, with an eye to adding graphs further down the line.
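
As a rough illustration of the percentage-change idea, here is a small TypeScript sketch. It assumes each COM-B dimension is stored as a single numeric score (for example, the mean of its Likert answers); that representation, the type names and the rounding are assumptions for the example, not how the product actually calculated scores.

```typescript
// The three measured COM-B dimensions.
type Dimension = "capability" | "opportunity" | "motivation";

// Assumption: one numeric score per dimension, e.g. the mean Likert answer.
type Scores = Record<Dimension, number>;

// Percentage change from baseline to latest, rounded to a whole percent.
// Returns null when the baseline is 0, because the change is undefined.
function percentChange(baseline: number, latest: number): number | null {
  if (baseline === 0) {
    return null;
  }
  return Math.round(((latest - baseline) * 100) / baseline);
}

function progress(baseline: Scores, latest: Scores): Record<Dimension, number | null> {
  return {
    capability: percentChange(baseline.capability, latest.capability),
    opportunity: percentChange(baseline.opportunity, latest.opportunity),
    motivation: percentChange(baseline.motivation, latest.motivation),
  };
}

// Made-up example scores, purely for illustration.
const exampleProgress = progress(
  { capability: 2.5, opportunity: 4, motivation: 3 },
  { capability: 3, opportunity: 4, motivation: 2.4 }
);
```

A positive number can be framed as encouragement on the feedback screen, while a flat or negative one may need more careful wording.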

(Deciding to show users their progress in a percentage change or visualised on a graph)

TESTING & VALIDATION

After much iteration and conversations with designers across the company, I arrived at a flow of designs to test. I made a prototype on ProtoPie in order to test the changes along with some new interactions. The testing was done on Userlytics with six users in order to gain some qualitative data on the changes we implemented. The prototype included the onboarding screens in order to give users the proper introduction to the product. It flowed like this:

The main goal was to make users want to engage with COM-B multiple times throughout the experience. While the updates went a long way towards this, there were still improvements that could be made.

We made 5 big updates:

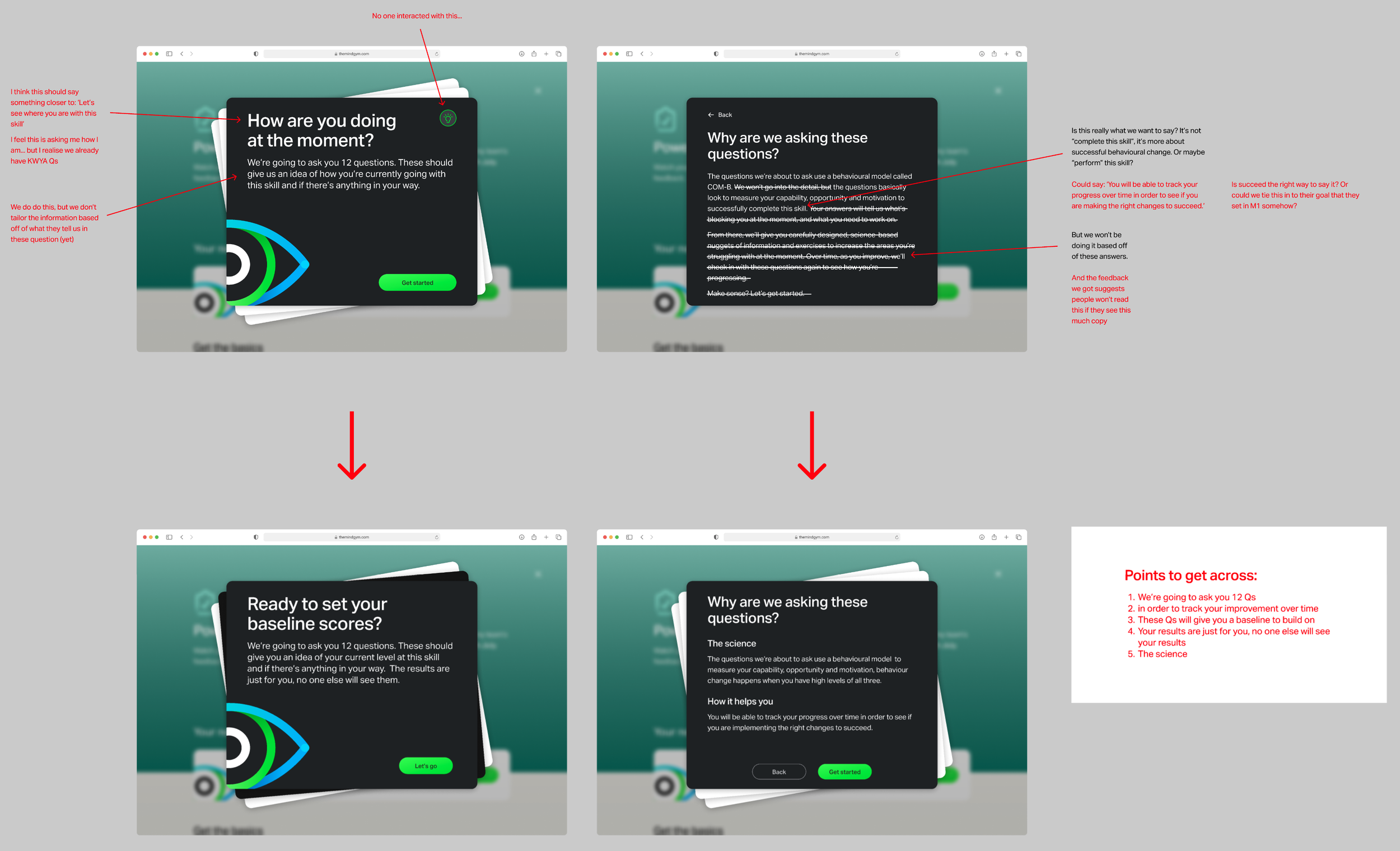

1. We improved the content on the intro and feedback screens

Users felt like this gave them a good sense of what the questions were about and why they were important. This was supported by the conversations I had with them, in which I asked questions like, “What are you expecting to see next?” and “Why do you think we are asking you these questions?”

Some users did not realise that the lightbulb icon on one of the screens was something they could interact with. I knew I needed to make this more obvious, perhaps with a pop-up introduction notification of some kind.

2. Allowing users to click through more easily

Users reacted very well to this and had no trouble interacting with the UI. They were confident in their selections and reported positive feelings about the card-flicking interaction.

3. Showing users their progress on the feedback screen

This got mixed reviews. Some users found their progression very interesting, while others thought that the questions were easy to game and not a fair representation of whether they had actually improved at something. I gave this feedback to the behavioural science team, who were going to consider it moving forward.

4. Updating the UI and interactions

Users reacted very positively to the UI and the interactions, saying it felt “professional” and “easy to use”.

5. Allowing users to skip post COM-B

We received mixed feedback on how often users said they would take the COM-B questions again. However, the ability to snooze COM-B was greatly appreciated, since it allowed them to interact with the content when they wanted to.

EVENT MODELLING

Off the back of these designs, we decided to try a version of event modelling as a team. It was a new process we were implementing, so we decided to use COM-B as a guinea pig. I took the lead on designing the structure and walking the team through the process. The aim was for everyone across the multi-functional team to know what needed to exist, what data needed to be captured, what user research questions we were trying to answer, and what the development requirements and considerations were at each stage of the user journey.

The idea was that this would be our source of truth as we developed and expanded upon COM-B.

OUTCOME

Based on testing, we experimented with a few further design and content changes. We got rid of the lightbulb on the introduction screen, instead putting the second screen into the flow so that users couldn’t miss it. Changes were made to the content, since it was felt that some of the information was repeated in the feedback screen and was not the ideal information to offer users at that time.

We decided not to allow users to skip COM-B endlessly. Instead, we put a limit of three skips in place, after which users had to complete COM-B before they could move on. We planned to track the success of this decision on MixPanel as users interacted with our product more.
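
The skip limit can be expressed as a very small piece of state. The TypeScript sketch below is a hypothetical illustration: the limit of three comes from the decision above, but the names, and the assumption that completing COM-B resets the counter, are mine rather than a description of the actual build.

```typescript
// Three skips are allowed before COM-B becomes mandatory.
const MAX_SKIPS = 3;

// Hypothetical per-user state for one pending COM-B check-in.
interface SkipState {
  skipsUsed: number;
}

function canSkip(state: SkipState): boolean {
  return state.skipsUsed < MAX_SKIPS;
}

// Records a skip, or throws if the user has run out of skips.
function skipComB(state: SkipState): SkipState {
  if (!canSkip(state)) {
    throw new Error("Skip limit reached: COM-B must be completed to continue");
  }
  return { skipsUsed: state.skipsUsed + 1 };
}

// Assumption: completing COM-B resets the counter for the next check-in.
function completeComB(): SkipState {
  return { skipsUsed: 0 };
}
```

In the UI, `canSkip` would decide whether the skip option is shown at all, so users never reach the error path.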

Even though the feedback screen had received mixed reviews, we decided to integrate the update into our build. Once more users had used the product over time, we could base our future decisions on that data.